How to install Rancher On K3s HA cluster

K3s is a light weight kubernetes distributen that is built for IoT & Edge computing. in this article we show how to install Rancher On K3s HA cluster

Here is our environment:

VMs:

Control Plane 1:

OS: Ubuntu server 20.04.2

CPU: 4 core

RAM: 8 GB

Disk: 20 GB

Filesystem: ext4

IP Address: 172.24.7.17

Control Plane 2:

OS: Ubuntu server 20.04.2

CPU: 4 core

RAM: 8 GB

Disk: 20 GB

Filesystem: ext4

IP Address: 172.24.7.18

Control Plane 3:

OS: Ubuntu server 20.04.2

CPU: 4 core

RAM: 8 GB

Disk: 20 GB

Filesystem: ext4

IP Address: 172.24.7.19

Keepalived Floating IP: 172.24.7.20

1- Setup MariaDB cluster

MariaDB Galera Cluster is a synchronous multi-master cluster for MariaDB with support for XtraDB/InnoDB storage engines. It has the following top features.

ProxySQL is a MySQL proxy server that used as an intermediary between the Galera cluster and the applications trying to access the cluster.

1.1 Update servers

Update the servers and reboot

sudo apt update && sudo apt -y upgrade sudo reboot

1.2 Setup Hostnames

Configure static hostnames on each of the three servers for DNS reachability:

sudo cat >> /etc/hosts << EOF 172.24.7.17 controller1.tuxtips.net node1 172.24.7.18 controller2.tuxtips.net node2 172.24.7.19 controller3.tuxtips.net node3 EOF

1.3 Install MariaDB on all nodes

Install the latest version of MariaDB with the commands below:

sudo apt update sudo apt -y install mariadb-server mariadb-client

Configure MariaDB for first use by running the command below, then run through the configuration appropriately.

sudo mysql_secure_installation

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY! In order to log into MariaDB to secure it, we'll need the current password for the root user. If you've just installed MariaDB, and you haven't set the root password yet, the password will be blank, so you should just press enter here. Enter current password for root (enter for none): OK, successfully used password, moving on... Setting the root password ensures that nobody can log into the MariaDB root user without the proper authorisation. Set root password? [Y/n] y New password: Re-enter new password: Password updated successfully! Reloading privilege tables.. ... Success! By default, a MariaDB installation has an anonymous user, allowing anyone to log into MariaDB without having to have a user account created for them. This is intended only for testing, and to make the installation go a bit smoother. You should remove them before moving into a production environment. Remove anonymous users? [Y/n] y ... Success! Normally, root should only be allowed to connect from 'localhost'. This ensures that someone cannot guess at the root password from the network. Disallow root login remotely? [Y/n] y ... Success! By default, MariaDB comes with a database named 'test' that anyone can access. This is also intended only for testing, and should be removed before moving into a production environment. Remove test database and access to it? [Y/n] y - Dropping test database... ... Success! - Removing privileges on test database... ... Success! Reloading the privilege tables will ensure that all changes made so far will take effect immediately. Reload privilege tables now? [Y/n] y ... Success! Cleaning up... All done! If you've completed all of the above steps, your MariaDB installation should now be secure. Thanks for using MariaDB!

Test connection to each of the databases by running the command below:

mysql -u root -p

You will be prompted to input a password that you had setup in the previous step.

1.4 Configure Galera Cluster

The next step is to configure galera cluster on our three MariaDB hosts. Comment the bind line on the file /etc/mysql/mariadb.conf.d/50-server.cnf which binds MariaDB service to 127.0.0.1

$ sudo sed -i 's/bind-address = 127.0.0.1/#bind-address = 127.0.0.1/g' /etc/mysql/mariadb.conf.d/50-server.cnf

1.4.1 Configure First Node

Add the following content to the MariaDB configuration file. Remember to modify the hostname at “wsrep_node_address” to the hostname or IP of your first host.

sudo cat >> /etc/mysql/mariadb.conf.d/50-server.cnf << EOF [galera] wsrep_on = ON wsrep_cluster_name = "MariaDB Galera Cluster" wsrep_provider = /usr/lib/galera/libgalera_smm.so wsrep_cluster_address = "gcomm://" binlog_format = row default_storage_engine = InnoDB innodb_autoinc_lock_mode = 2 bind-address = 0.0.0.0 wsrep_node_address="node1" EOF

Initialize galera cluster and restart MariaDB

sudo galera_new_cluster

1.4.2 Configure Galera nodes (node2 & node3)

Add the following configuration for node2 and node3 respectively:

Node2:

sudo cat >> /etc/mysql/mariadb.conf.d/50-server.cnf << EOF [galera] wsrep_on = ON wsrep_cluster_name = "MariaDB Galera Cluster" wsrep_provider = /usr/lib/galera/libgalera_smm.so wsrep_cluster_address = "gcomm://node1,node2,node3" binlog_format = row default_storage_engine = InnoDB innodb_autoinc_lock_mode = 2 bind-address = 0.0.0.0 wsrep_node_address="node2" EOF

Node3:

sudo cat >> /etc/mysql/mariadb.conf.d/50-server.cnf << EOF [galera] wsrep_on = ON wsrep_cluster_name = "MariaDB Galera Cluster" wsrep_provider = /usr/lib/galera/libgalera_smm.so wsrep_cluster_address = "gcomm://node1,node2,node3" binlog_format = row default_storage_engine = InnoDB innodb_autoinc_lock_mode = 2 bind-address = 0.0.0.0 wsrep_node_address="node3" EOF

Restart MariaDB service on node2 and node3

sudo systemctl restart mariadb

1.4.3 Validate Galera Settings

Login to any of the three nodes as the root user, then confirm that the cluster settings are OK.

sudo mysql -u root -p

Enter password: Welcome to the MariaDB monitor. Commands end with ; or \g. Your MariaDB connection id is 35 Server version: 10.5.9-MariaDB-1:10.5.9+maria~focal mariadb.org binary distribution Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. MariaDB [(none)]>

Check status

MariaDB [(none)]> show status like 'wsrep_%'; +-------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------+ | Variable_name | Value | +-------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------+ | wsrep_local_state_uuid | c2e29a95-a81f-11eb-a1fc-237e9071c44f | | wsrep_protocol_version | 10 | | wsrep_last_committed | 7 | | wsrep_replicated | 0 | | wsrep_replicated_bytes | 0 | | wsrep_repl_keys | 0 | | wsrep_repl_keys_bytes | 0 | | wsrep_repl_data_bytes | 0 | | wsrep_repl_other_bytes | 0 | | wsrep_received | 7 | | wsrep_received_bytes | 690 | | wsrep_local_commits | 0 | | wsrep_local_cert_failures | 0 | | wsrep_local_replays | 0 | | wsrep_local_send_queue | 0 | | wsrep_local_send_queue_max | 1 | | wsrep_local_send_queue_min | 0 | | wsrep_local_send_queue_avg | 0 | | wsrep_local_recv_queue | 0 | | wsrep_local_recv_queue_max | 2 | | wsrep_local_recv_queue_min | 0 | | wsrep_local_recv_queue_avg | 0.142857 | | wsrep_local_cached_downto | 1 | | wsrep_flow_control_paused_ns | 0 | | wsrep_flow_control_paused | 0 | | wsrep_flow_control_sent | 0 | | wsrep_flow_control_recv | 0 | | wsrep_flow_control_active | false | | wsrep_flow_control_requested | false | | wsrep_cert_deps_distance | 0 | | wsrep_apply_oooe | 0 | | wsrep_apply_oool | 0 | | wsrep_apply_window | 0 | | wsrep_commit_oooe | 0 | | wsrep_commit_oool | 0 | | wsrep_commit_window | 0 | | wsrep_local_state | 4 | | wsrep_local_state_comment | Synced | | wsrep_cert_index_size | 0 | | wsrep_causal_reads | 0 | | wsrep_cert_interval | 0 | | wsrep_open_transactions | 0 | | wsrep_open_connections | 0 | | wsrep_incoming_addresses | AUTO,AUTO,AUTO | | wsrep_cluster_weight | 3 | | wsrep_desync_count | 0 | | wsrep_evs_delayed | | | wsrep_evs_evict_list | | | wsrep_evs_repl_latency | 0/0/0/0/0 | | wsrep_evs_state | OPERATIONAL | | wsrep_gcomm_uuid | e4af838d-a824-11eb-95d0-72f816b84c68 | | wsrep_gmcast_segment | 0 | | wsrep_applier_thread_count | 1 | | wsrep_cluster_capabilities | | | wsrep_cluster_conf_id | 3 | | wsrep_cluster_size | 3 | | wsrep_cluster_state_uuid | c2e29a95-a81f-11eb-a1fc-237e9071c44f | | wsrep_cluster_status | Primary | | wsrep_connected | ON | | wsrep_local_bf_aborts | 0 | | wsrep_local_index | 2 | | wsrep_provider_capabilities | :MULTI_MASTER:CERTIFICATION:PARALLEL_APPLYING:TRX_REPLAY:ISOLATION:PAUSE:CAUSAL_READS:INCREMENTAL_WRITESET:UNORDERED:PREORDERED:STREAMING:NBO: | | wsrep_provider_name | Galera | | wsrep_provider_vendor | Codership Oy <[email protected]> | | wsrep_provider_version | 4.7(ree4f10fc) | | wsrep_ready | ON | | wsrep_rollbacker_thread_count | 1 | | wsrep_thread_count | 2 | +-------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------+ 68 rows in set (0.002 sec) MariaDB [(none)]>

Confirm that we have a cluster size of 3 under:

wsrep_cluster_size 3

We can create a test database on any of the nodes and check its availability on the other nodes.

root@node1:~# sudo mysql -u root -p Enter password: Welcome to the MariaDB monitor. Commands end with ; or \g. Your MariaDB connection id is 49 Server version: 10.5.9-MariaDB-1:10.5.9+maria~focal mariadb.org binary distribution Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. MariaDB [(none)]> create database k3s; Query OK, 1 row affected (0.003 sec)

On node2 and node3

root@node2:~# sudo mysql -u root -p Enter password: Welcome to the MariaDB monitor. Commands end with ; or \g. Your MariaDB connection id is 45 Server version: 10.5.9-MariaDB-1:10.5.9+maria~focal mariadb.org binary distribution Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. MariaDB [(none)]> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | mysql | | performance_schema | | k3s | +--------------------+ 4 rows in set (0.001 sec) root@node3:~# sudo mysql -u root -p Enter password: Welcome to the MariaDB monitor. Commands end with ; or \g. Your MariaDB connection id is 46 Server version: 10.5.9-MariaDB-1:10.5.9+maria~focal mariadb.org binary distribution Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. MariaDB [(none)]> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | mysql | | performance_schema | | k3s | +--------------------+ 4 rows in set (0.001 sec)

This confirms that the database created on node1 is replicated across the cluster.

1.4.4 Secure cluster with SSL

To fully secure all cluster communication we must SSL-encrypt replication traffic within Galera Cluster, State Snapshot Transfer and traffic between database server and client.

Stop Mariadb On controller03, then on controller02 and finally on controller01

We will create SSL Certificates and Keys using openssl.

Create new folder for certificates:

sudo mkdir -p /etc/mysql/ssl sudo cd /etc/mysql/ssl

Generate CA key:

sudo openssl genrsa 4096 > ca-key.pem

Using the CA key, generate the CA certificate

sudo openssl req -new -x509 -nodes -days 3600 -key ca-key.pem -out ca-cert.pem

----- Country Name (2 letter code) [AU]:US State or Province Name (full name) [Some-State]:WA Locality Name (eg, city) []:Los angeles Organization Name (eg, company) [Internet Widgits Pty Ltd]:tuxtips Organizational Unit Name (eg, section) []:DC Common Name (e.g. server FQDN or YOUR name) []:tuxtips.net Email Address []:

Create the server key:

sudo openssl req -newkey rsa:2048 -days 3600 -nodes -keyout server-key.pem -out server-req.pem

Create server certificate, remove passphrase, and sign it

----- Country Name (2 letter code) [AU]:US State or Province Name (full name) [Some-State]:WA Locality Name (eg, city) []:Los angeles Organization Name (eg, company) [Internet Widgits Pty Ltd]:tuxtips Organizational Unit Name (eg, section) []:DC

Use the “tuxtips.net” only on the first certificate:

Common Name (e.g. server FQDN or YOUR name) []:controller01.tuxtips.net Email Address []:

Please enter the following ‘extra’ attributes to be sent with your certificate request:

A challenge password []: An optional company name []:

Process the server RSA key:

sudo openssl rsa -in server-key.pem -out server-key.pem

Sign the server certificate:

sudo openssl x509 -req -in server-req.pem -days 3600 -CA ca-cert.pem -CAkey ca-key.pem -set_serial 01 -out server-cert.pem

Create the client key:

sudo openssl req -newkey rsa:2048 -days 3600 -nodes -keyout client-key.pem -out client-req.pem

----- Country Name (2 letter code) [AU]:US State or Province Name (full name) [Some-State]:WA Locality Name (eg, city) []:Los angeles Organization Name (eg, company) [Internet Widgits Pty Ltd]:tuxtips Organizational Unit Name (eg, section) []:DC Common Name (e.g. server FQDN or YOUR name) []:controller1 Email Address []:[email protected] Please enter the following 'extra' attributes to be sent with your certificate request # A challenge password []: # An optional company name []:

Process client RSA key:

sudo openssl rsa -in client-key.pem -out client-key.pem

Sign the client certificate:

sudo openssl x509 -req -in client-req.pem -days 3600 -CA ca-cert.pem -CAkey ca-key.pem -set_serial 01 -out client-cert.pem

Verify certificates:

sudo openssl verify -CAfile ca-cert.pem server-cert.pem client-cert.pem server-cert.pem: OK client-cert.pem: OK

If verification succeeds copy certificates to all nodes in the cluster.

Set mysql as owner of the files.

Copy:

sudo scp -r /etc/mysql/ssl controller2:/etc/mysql sudo scp -r /etc/mysql/ssl controller3:/etc/mysql

Change owner of ssl files on ALL nodes:

sudo chown -R mysql:mysql /etc/mysql/ssl

Secure database and client connections.

Add following lines in configuration file of ALL DB servers:

sudo cat >> /etc/mysql/my.cnf << EOF [mysqld] ssl-ca=/etc/mysql/ssl/ca-cert.pem ssl-cert=/etc/mysql/ssl/server-cert.pem ssl-key=/etc/mysql/ssl/server-key.pem [client] ssl-ca=/etc/mysql/ssl/ca-cert.pem ssl-cert=/etc/mysql/ssl/client-cert.pem ssl-key=/etc/mysql/ssl/client-key.pem EOF

Define paths to the key, certificate and certificate authority files. Galera Cluster will use this files for encrypting and decrypting replication traffic.

put this in /etc/mysql/mariadb.conf.d/50-server.cnf

sudo cat >> /etc/mysql/mariadb.conf.d/50-server.cnf << EOF wsrep_provider_options="socket.ssl_key=/etc/mysql/ssl/server-key.pem;socket.ssl_cert=/etc/mysql/ssl/server-cert.pem;socket.ssl_ca=/etc/mysql/ssl/ca-cert.pem;socket.checksum=2;socket.ssl_cipher=AES128-SHA256" EOF

Now we must recreate whole cluster.

Start mariadb on controller01:

sudo galera_new_cluster

then on controller02 and controller03 start mariadb normally:

sudo systemctl start mariadb

Now we check db status:

root@node1:~# sudo mysql -u root -p Enter password: Welcome to the MariaDB monitor. Commands end with ; or \g. Your MariaDB connection id is 49 Server version: 10.5.9-MariaDB-1:10.5.9+maria~focal mariadb.org binary distribution Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. MariaDB [(none)]> SHOW VARIABLES LIKE 'have_ssl'; +---------------+-------+ | Variable_name | Value | +---------------+-------+ | have_ssl | YES | +---------------+-------+ 1 row in set (0.01 sec)

This confirms that the connection between nodes in cluster has beem secured with SSL.

2- Setup ProxySQL

With a working Galera cluster, we need to setup a ProxySQL server that will distribute traffic to the three nodes equally. ProxySQL can run on the server that has the application or run as an independent server.

2.1 Add ProxySQL repository:

sudo apt install -y lsb-release wget -O - 'https://repo.proxysql.com/ProxySQL/repo_pub_key' | sudo apt-key add - echo deb https://repo.proxysql.com/ProxySQL/proxysql-2.1.x/$(lsb_release -sc)/ ./ | tee /etc/apt/sources.list.d/proxysql.list

2.2 Install ProxySQL:

sudo apt update sudo apt install proxysql mysql-client

After a successful installation, start and enable the service on your host.

sudo systemctl enable --now proxysql

The next step is to configure ProxySQL through admin interface. The admin interface allows you to save configuration without restarting the proxy. This is achieved through SQL queries to the admin database.

To connect to ProxySQL admin interface, we need a mysql-client. The admin interface runs locally on port 6032 and the default username/password is admin/admin.

mysql -u admin -padmin -h 127.0.0.1 -P6032 --prompt='Admin> '

root@proxy:~# mysql -u admin -padmin -h 127.0.0.1 -P6032 --prompt='Admin> ' mysql: [Warning] Using a password on the command line interface can be insecure. Welcome to the MySQL monitor. Commands end with ; or \g. Your MySQL connection id is 1 Server version: 5.5.30 (ProxySQL Admin Module) Copyright (c) 2000, 2021, Oracle and/or its affiliates. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. Admin>

Change the default password for security reasons:

UPDATE global_variables SET variable_value='admin:Y0urP@ssw0rd' WHERE variable_name='admin-admin_credentials';

Remember to replace “Y0urP@ssw0rd” with a strong password of your choice.

ProxySQL configuration system consists of three layers:

Memory – Altered when making modifications on the command-line

Disk – used for persistent configuration changes

Runtime – Used as the effective configuration for ProxySQL.

This consequently means that the query above has only been written to memory. To make it persistent, we need to copy the configuration to runtime then save them to disk.

To do that, run the queries below:

LOAD ADMIN VARIABLES TO RUNTIME; SAVE ADMIN VARIABLES TO DISK;

2.3 Configure Monitoring in Galera cluster

ProxySQL needs to communicate with the MariaDB nodes in the Galera cluster to know their health status. This means that ProxySQL has to connect to the nodes through a dedicated user.

We will create a user on one of the MariaDB nodes, the user will be replicated automatically through the cluster since the cluster is already up and running.

MariaDB [(none)]> CREATE USER 'monitor'@'%' IDENTIFIED BY 'm0n1toRp@ssw0d'; MariaDB [(none)]> flush privileges;

Modify the password to a password of your preference.

2.4 Configure Monitoring In ProxySQL

Configure ProxySQL admin to constantly monitor the backend nodes.

Add the user credentials that we configured in the step above. Remember to modify the value for password to fit whatever you have used in the previous step.

ProxySQL Admin> UPDATE global_variables SET variable_value='monitor' WHERE variable_name='mysql-monitor_username'; ProxySQL Admin> UPDATE global_variables SET variable_value='m0n1toRp@ssw0d' WHERE variable_name='mysql-monitor_password';

Add the following monitoring parameters for intervals:

ProxySQL Admin> UPDATE global_variables SET variable_value='2000' WHERE variable_name IN ('mysql-monitor_connect_interval','mysql-monitor_ping_interval','mysql-monitor_read_only_interval');Confirm the variables we just configured in the above step:

Admin> SELECT * FROM global_variables WHERE variable_name LIKE 'mysql-monitor_%'; +--------------------------------------------------------------+----------------+ | variable_name | variable_value | +--------------------------------------------------------------+----------------+ | mysql-monitor_enabled | true | | mysql-monitor_connect_timeout | 600 | | mysql-monitor_ping_max_failures | 3 | | mysql-monitor_ping_timeout | 1000 | | mysql-monitor_read_only_max_timeout_count | 3 | | mysql-monitor_replication_lag_interval | 10000 | | mysql-monitor_replication_lag_timeout | 1000 | | mysql-monitor_replication_lag_count | 1 | | mysql-monitor_groupreplication_healthcheck_interval | 5000 | | mysql-monitor_groupreplication_healthcheck_timeout | 800 | | mysql-monitor_groupreplication_healthcheck_max_timeout_count | 3 | | mysql-monitor_groupreplication_max_transactions_behind_count | 3 | | mysql-monitor_galera_healthcheck_interval | 5000 | | mysql-monitor_galera_healthcheck_timeout | 800 | | mysql-monitor_galera_healthcheck_max_timeout_count | 3 | | mysql-monitor_replication_lag_use_percona_heartbeat | | | mysql-monitor_query_interval | 60000 | | mysql-monitor_query_timeout | 100 | | mysql-monitor_slave_lag_when_null | 60 | | mysql-monitor_threads_min | 8 | | mysql-monitor_threads_max | 128 | | mysql-monitor_threads_queue_maxsize | 128 | | mysql-monitor_wait_timeout | true | | mysql-monitor_writer_is_also_reader | true | | mysql-monitor_username | monitor | | mysql-monitor_password | m0n1toRp@ssw0d | | mysql-monitor_history | 600000 | | mysql-monitor_connect_interval | 2000 | | mysql-monitor_ping_interval | 2000 | | mysql-monitor_read_only_interval | 2000 | | mysql-monitor_read_only_timeout | 500 | +--------------------------------------------------------------+----------------+ 31 rows in set (0.00 sec)

Save changes to disk:

Admin> LOAD MYSQL VARIABLES TO RUNTIME; Query OK, 0 rows affected (0.00 sec) Admin> SAVE MYSQL VARIABLES TO DISK; Query OK, 140 rows affected (0.01 sec)

2.5 Add Backend nodes

The next step is to add the three MariaDB nodes that exist in our Galera cluster. ProxySQL used host groups to categorize the backend nodes. A host group is a set of nodes identified by a positive number e.g. 1 or 2. The purpose of having host groups is to help ProxySQL route queries to different sets of hosts using ProxySQL query routing.

ProxySQL has the following logical host groups:

Writers – these are MySQL nodes that can accept queries that can write/change data – Primary nodes.

Readers – Nodes that can only accept read queries – Slaves nodes.

We will assign the following host group IDs to the above hostgroups:

Writers – 1, readers – 2. Writers are also readers by default.

Configure the table mysql_replication_hostgroup in the main database and specify the reader and writer hostgroups.

SHOW CREATE TABLE main.mysql_replication_hostgroups\G Admin> INSERT INTO main.mysql_replication_hostgroups (writer_hostgroup,reader_hostgroup,comment) VALUES (1,2,'galera_cluster');

Add the Galera cluster nodes:

INSERT INTO main.mysql_servers(hostgroup_id,hostname,port) VALUES (1,'172.24.7.17',3306); INSERT INTO main.mysql_servers(hostgroup_id,hostname,port) VALUES (1,'172.24.7.18',3306); INSERT INTO main.mysql_servers(hostgroup_id,hostname,port) VALUES (1,'172.24.7.19',3306);

Save changes to disk;

LOAD MYSQL SERVERS TO RUNTIME; SAVE MYSQL SERVERS TO DISK;

Confirm that the servers are reachable:

Admin> SELECT * FROM monitor.mysql_server_connect_log ORDER BY time_start_us DESC LIMIT 3; +--------------+------+------------------+-------------------------+---------------+ | hostname | port | time_start_us | connect_success_time_us | connect_error | +--------------+------+------------------+-------------------------+---------------+ | 172.24.7.17 | 3306 | 1619703478153182 | 1062 | NULL | | 172.24.7.18 | 3306 | 1619703478130560 | 923 | NULL | | 172.24.7.19 | 3306 | 1619703478108016 | 984 | NULL | +--------------+------+------------------+-------------------------+---------------+ 3 rows in set (0.00 sec) Admin> SELECT * FROM monitor.mysql_server_ping_log ORDER BY time_start_us DESC LIMIT 3; +--------------+------+------------------+----------------------+------------+ | hostname | port | time_start_us | ping_success_time_us | ping_error | +--------------+------+------------------+----------------------+------------+ | 172.24.7.17 | 3306 | 1619703506146573 | 358 | NULL | | 172.24.7.18 | 3306 | 1619703506123187 | 431 | NULL | | 172.24.7.19 | 3306 | 1619703504166074 | 253 | NULL | +--------------+------+------------------+----------------------+------------+ 3 rows in set (0.00 sec) Admin>

2.6 Create MySQL users

The last step is to create MySQL users that will be connecting to the cluster through the ProxySQL instance.

Create a MySQL user on one of the nodes on galera cluster that users will use to connect.

MariaDB [(none)]> create user 'proxysql'@'%' identified by 'testpassword';

Assingn the neccessary roles to the user, e.g access to a certain database.

MariaDB [(none)]> grant all privileges on k3s.* to 'proxysql'@'%' identified by 'testpassword' with grant option; MariaDB [(none)]> flush privileges;

Create remote user on ProxySQL Admin

This is done by adding entries in the mysql_users table in the main database.

Admin> SHOW CREATE TABLE mysql_users\G *************************** 1. row *************************** table: mysql_users Create Table: CREATE TABLE mysql_users ( username VARCHAR NOT NULL, password VARCHAR, active INT CHECK (active IN (0,1)) NOT NULL DEFAULT 1, use_ssl INT CHECK (use_ssl IN (0,1)) NOT NULL DEFAULT 0, default_hostgroup INT NOT NULL DEFAULT 0, default_schema VARCHAR, schema_locked INT CHECK (schema_locked IN (0,1)) NOT NULL DEFAULT 0, transaction_persistent INT CHECK (transaction_persistent IN (0,1)) NOT NULL DEFAULT 1, fast_forward INT CHECK (fast_forward IN (0,1)) NOT NULL DEFAULT 0, backend INT CHECK (backend IN (0,1)) NOT NULL DEFAULT 1, frontend INT CHECK (frontend IN (0,1)) NOT NULL DEFAULT 1, max_connections INT CHECK (max_connections >=0) NOT NULL DEFAULT 10000, attributes VARCHAR CHECK (JSON_VALID(attributes) OR attributes = '') NOT NULL DEFAULT '', comment VARCHAR NOT NULL DEFAULT '', PRIMARY KEY (username, backend), UNIQUE (username, frontend)) 1 row in set (0.00 sec)

The table is usually empty and users are added by modifying the table. You specify the username,password and default hostgroup.

Admin> INSERT INTO mysql_users(username,password,default_hostgroup) VALUES ('proxysql','testpassword',1);

SELECT * FROM mysql_users;Save changes:

LOAD MYSQL USERS TO RUNTIME; SAVE MYSQL USERS TO DISK;

2.7 Test client connection

ProxySQL client runs on port 6033. We can try connecting to the proxy client using the user we created on galera and proxysql.

root@proxy:~# mysql -uproxysql -h 127.0.0.1 -P6033 -p Enter password: Welcome to the MySQL monitor. Commands end with ; or \g. Your MySQL connection id is 17 Server version: 5.5.30 (ProxySQL) Copyright (c) 2000, 2021, Oracle and/or its affiliates. Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. mysql>

We can now try run queries on the cluster.

mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | proxysql | +--------------------+ 2 rows in set (0.00 sec) mysql> select @@hostname; +------------+ | @@hostname | +------------+ | node3 | +------------+ 1 row in set (0.00 sec) mysql>

We can see that we have visibility on testdb a database we had assigned the rights to in the galera setup. We can also confirm that we are getting the responses from node3 of the galera cluster.

2.8 Simulate node failure

We finally have to test what happens when a node goes offline. Shut down MariaDB service on one of the nodes and check the status on ProxySQL admin interface:

Admin> SELECT hostgroup,srv_host,status FROM stats.stats_mysql_connection_pool; +-----------+--------------+---------+ | hostgroup | srv_host | status | +-----------+--------------+---------+ | 1 | 172.24.7.17 | ONLINE | | 1 | 172.24.7.18 | ONLINE | | 1 | 172.24.7.19 | SHUNNED | +-----------+--------------+---------+ 3 rows in set (0.00 sec)

The status for one of the nodes is SHUNNED, which means that the host is temporarily unavailable. Upon restarting MariaDB service on the node, the status changes back to online on the ProxySQL management interface. That means that you can now send read/write queries to the node once again.

3- Setup Keepalived

First we install keepalived package:

apt install keepalived

Edit “/etc/sysctl.conf” and append this line to allow system to bind on virtual IP.

net.ipv4.ip_nonlocal_bind = 1

Reload system configuration.

sysctl -p

Create “/etc/keepalived/check_scripts/check_db” file which will check database for hostname of master node and return 0 if the current hostname is equal to master node. We will use it as health monitor of Galera cluster and force keepalived to bind VIP to master node.

mkdir /etc/keepalived/check_scripts/

cat > /etc/keepalived/check_scripts/check_db << EOF

#!/bin/bash

mysql_host="$(hostname)";

mysql_user="${1}";

mysql_pass="${2}";

node_response=$(mysql -h localhost -u ${mysql_user} -p${mysql_pass} -P 6033 -e "SHOW GLOBAL VARIABLES;" | grep "wsrep_node_name" | awk '{ print $2 }');

if [ "${node_response}" == "${mysql_host}" ]

then

exit 0;

else

exit 1;

fi

EOFSet executable permission for created file.

chmod +x /etc/keepalived/check_scripts/check_db

Create a user for keepalived_csdb to check cluster health. So login to Database by executing “mysql -u root -p” and after user creation exit from Database. (Note that, Create user only in first database, NO need to repeat this step for other servers.)

CREATE USER '<keepalived_user>'@'localhost' IDENTIFIED BY '<keepalived_password>';

On controller01:

Edit “/etc/keepalived/keepalived.conf” file and replace all its content with lines below.

global_defs {

router_id LVS_PRO

}

vrrp_sync_group G1 {

group {

eth0

}

}

vrrp_script chk_mysql {

script "/etc/keepalived/check_scripts/check_db "$USER" $PASS"

interval 1

}

vrrp_instance eth0 {

interface eth0

state MASTER

priority 100

virtual_router_id 156

advert_int 1

authentication {

auth_type PASS

auth_pass vd567

}

unicast_src_ip 172.24.7.17

unicast_peer {

172.24.7.18

172.24.7.19

}

virtual_ipaddress {

172.24.7.20

}

track_script {

chk_mysql

}

}

A function named “chk_mysql” will check proxysql availability and keepalived will release virtual IP if proxysql goes down.

In above we set interfaces as “MASTER” with “priority” of 100. In second and third keepalived we set interfaces as “BACKUP” with “priority” of 50 and 49. So if an incident occurs, keepalived will failover to the second or third node. If the problem was resolved on first node, keepalived with fallback to the first node.

The authentication part of configuration should be same on both load balancers.

On controller02:

Edit “/etc/keepalived/keepalived.conf” file and replace all its content with lines below.

global_defs {

router_id LVS_PRO

}

vrrp_sync_group G1 {

group {

eth0

}

}

vrrp_script chk_mysql {

script "/etc/keepalived/check_scripts/check_db "$USER" $PASS"

interval 1

}

vrrp_instance eth0 {

interface eth0

state BACKUP

priority 50

virtual_router_id 156

advert_int 1

authentication {

auth_type PASS

auth_pass vd567

}

unicast_src_ip 172.24.7.18

unicast_peer {

172.24.7.17

172.24.7.19

}

virtual_ipaddress {

172.24.7.20

}

track_script {

chk_mysql

}

}

On controller03:

Edit “/etc/keepalived/keepalived.conf” file and replace all its content with lines below.

global_defs {

router_id LVS_PRO

}

vrrp_sync_group G1 {

group {

eth0

}

}

vrrp_script chk_mysql {

script "/etc/keepalived/check_scripts/check_db "$USER" $PASS"

interval 1

}

vrrp_instance eth0 {

interface eth0

state BACKUP

priority 49

virtual_router_id 156

advert_int 1

authentication {

auth_type PASS

auth_pass vd567

}

unicast_src_ip 172.24.7.19

unicast_peer {

172.24.7.17

172.24.7.18

}

virtual_ipaddress {

172.24.7.20

}

track_script {

chk_mysql

}

}

On ALL Nodes, Configure keepalived to start on each boot and restart it.

systemctl status keepalived systemctl enabled keepalived

How to tested keepalived .

ip addr | grep "inet" | grep "eth0"

inet 172.24.7.17/32 scope global eth0

inet 172.24.7.20/20 brd 172.24.7.255 scope global eth04- Setup K3s

On AF-PROD-RNPR01 we install K3s master:

curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION=v1.21.5%2Bk3s1 K3S_DATASTORE_ENDPOINT='mysql://PROXYSQL_USER:PROXYSQL_PASSWORD@tcp(KEEPALIVED_FLOATING_IP:6033)/DATABASE_NAME' sh - server

to join the two other nodes to master we need TOKEN. we can find it by:

cat /var/lib/rancher/k3s/server/node-token

then we issue the following command on the two other nodes:

curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION=v1.21.5%2Bk3s1 K3S_DATASTORE_ENDPOINT='mysql://PROXYSQL_USER:PROXYSQL_PASSWORD@tcp(KEEPALIVED_FLOATING_IP:6033)/DATABASE_NAME' K3S_TOKEN='TOKEN FROM PREVIOUS COMMAND' - server

Add K3s certificate to system’s known certificates:

SERVER="127.0.0.1:6443"

openssl s_client -showcerts -connect $SERVER </dev/null 2>/dev/null | openssl x509 -text | sed -ne '/-----BEGIN CERTIFICATE-----/,/-----END CERTIFICATE-----/p' > cert.crt

cp cert.crt /usr/local/share/ca-certificates/${SERVER}.crt

update-ca-certificates5- Install Helm:

we have to get the latest version of helm from:

https://github.com/helm/helm/releases

currently the latest version is: 3.7.0

wget https://get.helm.sh/helm-v3.7.0-linux-amd64.tar.gz

then we unpack it and make it executable:

tar -xzvf helm-v3.7.0-linux-amd64.tar.gz mv linux-amd64/helm /usr/local/bin/helm && chmod +x /usr/local/bin/helm

6- Install Rancher

6.1 Add the Helm Chart Repository:

helm repo add rancher-latest https://releases.rancher.com/server-charts/latest

Create a Namespace for Rancher:

kubectl create namespace cattle-system

6.2 Install cert-manager:

# If you have installed the CRDs manually instead of with the `–set installCRDs=true` option added to your Helm install command, you should upgrade your CRD resources before upgrading the Helm chart:

kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.5.1/cert-manager.crds.yaml

# Add the Jetstack Helm repository

helm repo add jetstack https://charts.jetstack.io

# Update your local Helm chart repository cache

helm repo update

First we check if helm CRDs has been installed or not:

kubectl get crd

if it has been installed we issue the following command with installCRDs=false, else we use installCRDs=true

# Install the cert-manager Helm chart

KUBECONFIG=/etc/rancher/k3s/k3s.yaml helm install cert-manager jetstack/cert-manager \ --namespace cert-manager \ --create-namespace \ --version v1.5.1 \ --set installCRDs=false

Once we’ve installed cert-manager, you can verify it is deployed correctly by checking the cert-manager namespace for running pods:

kubectl get pods --namespace cert-manager

6.3 Install Rancher:

KUBECONFIG=/etc/rancher/k3s/k3s.yaml helm install rancher rancher-latest/rancher \ --namespace cattle-system \ --set hostname=rancher.tuxtips.net \ --set bootstrapPassword=admin

Wait for Rancher to be rolled out:

kubectl -n cattle-system rollout status deploy/rancher Waiting for deployment "rancher" rollout to finish: 0 of 3 updated replicas are available... deployment "rancher" successfully rolled out

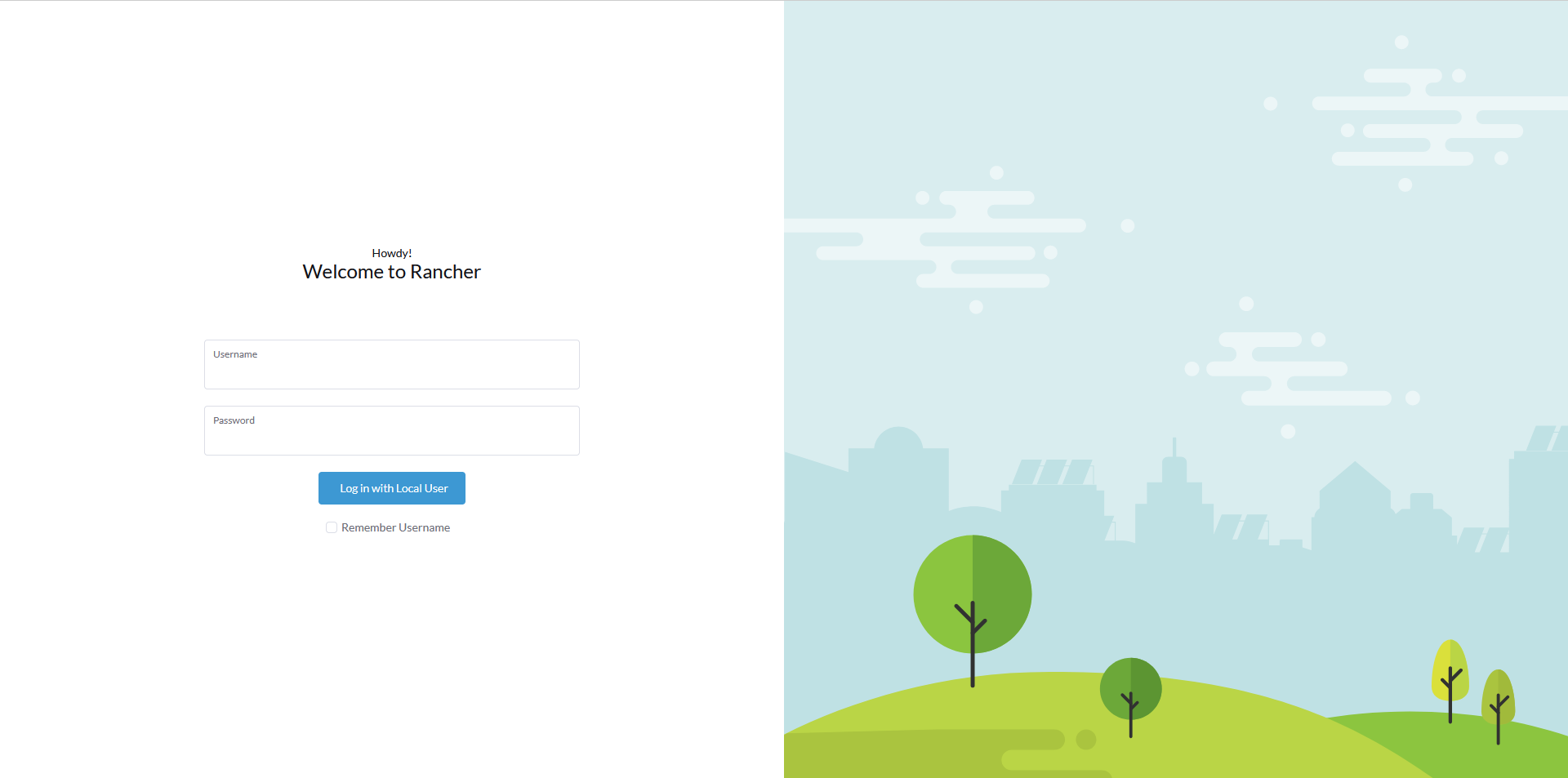

Now we can access Rancher UI by heading to the following address in any web browser:

https://rancher.tuxtips.net

IMPORTANT: if we access Rancher UI by using its IP address instead of FQDN, we may face the following error:

404 page not found

2.1 Add ProxySQL repository

It looks like the 2.1 section doesn’t work anymore…

This page: https://proxysql.com/documentation/installing-proxysql/ is hinting that we now must use vesrion 2.2

apt-get install -y lsb-release

wget -O – ‘https://repo.proxysql.com/ProxySQL/repo_pub_key’ | apt-key add –

echo deb https://repo.proxysql.com/ProxySQL/proxysql-2.2.x/$(lsb_release -sc)/ ./ | tee /etc/apt/sources.list.d/proxysql.list

apt-get update

apt-get install proxysql

Hello,

thanks for sharing this setup guide.

In my understanding the HA cluster consists of 4 nodes:

– node A: Galera Cluster node 1, k3s node 1

– node B: Galera Cluster node 2 k3s node 2

– node C: Galera Cluster node 3, k3s node 3

– node D: ProxySQL server

If this is correct, node D is a single point of failure (SPOF), right?

If this is true, why don’t you setup a Rancher HA cluster with embedded DB (https://rancher.com/docs/k3s/latest/en/installation/ha-embedded/)?

There are only 3 nodes and mariadb, proxysql and the other packages have been installed on these 3 nodes. so if one of them goes down, the two other operates normally and there is no single point of failure

To wich IP should point the DNS name rancher.tuxtips.net? The floating IP 172.24.7.20?